WebGPU + WebLLM: Running LLMs in the Browser

What is WebGPU? #

WebGPU is a web standard that enables native GPU execution on browsers, allowing for faster graphics and computations. WebGPU is the successor to WebGL, which was designed for graphics rendering, but WebGPU has first-class support for GPGPU computations

WebLLM is a new project that runs large language models fully inside the browser, reducing costs and enhancing personalization and privacy.

Running large language models in the browser is made possible by WebLLM and WebGPU. WebLLM is a project that runs large language models fully inside the browser, while WebGPU enables native GPU execution on browsers, allowing for faster computations

LLMs in the browser #

With WebLLM we can compile open source large language models to work with WebGPU and run entirely in the browser:

Here are some links to some of models compiled for WebGPU and Web Assembly:

Here is a great guide on how to compile different models to WebGPU.

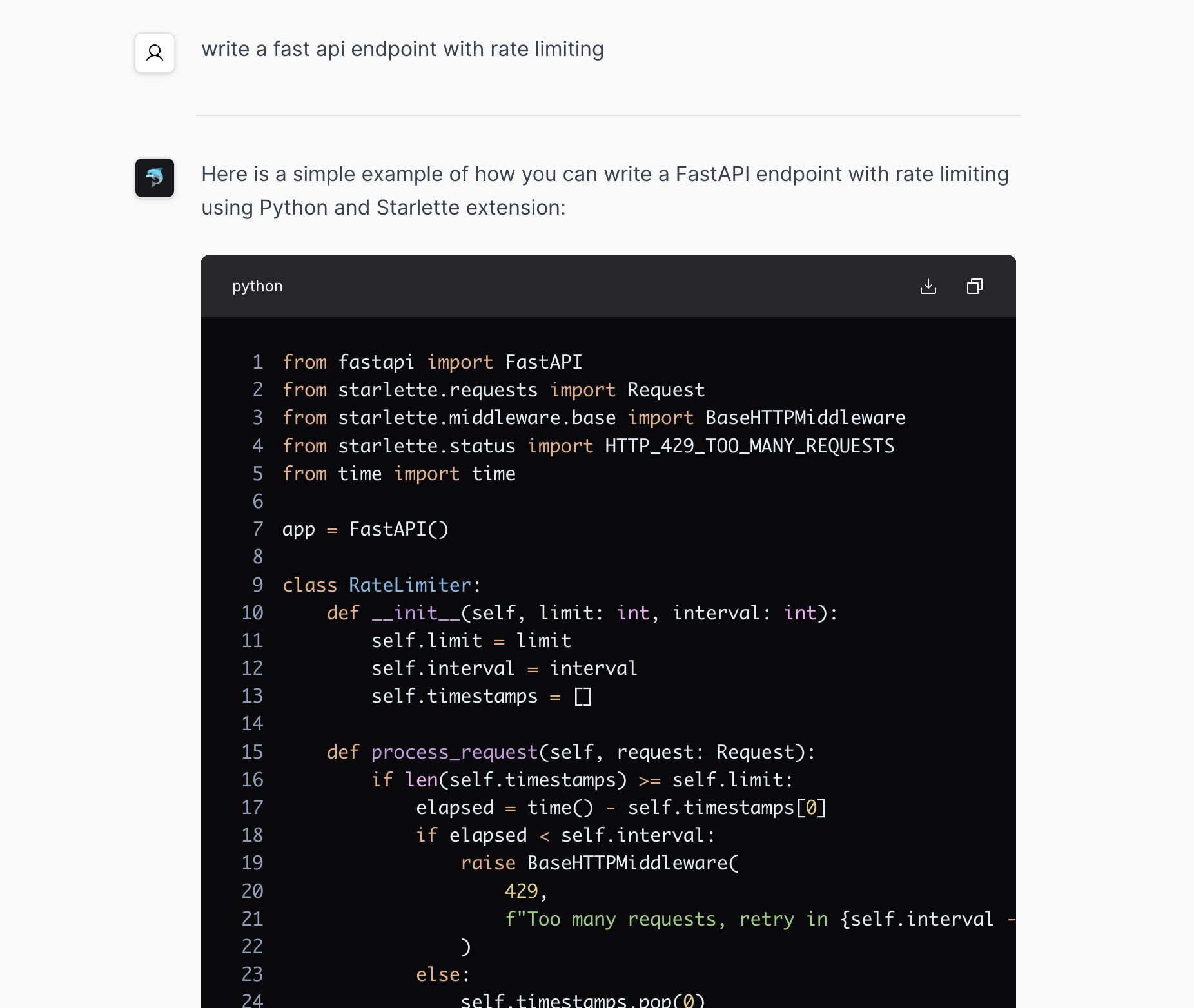

How we can run them in the browser #

Once we have a model compiled for Web GPU, we can use WebLLM to run it for inference. Here is a really cool demo where you can run the demo at wasmai.vercal.app.

We are going to continue compiling new models as they come out and hopefully add them to the free tools for you to try 😊!

Whisper Turbo #

Whisper Turbo is another big project that brings Whisper to the browser. It can transcribe audio at about 20x realtime speed. Super cool, that you can have a performant model, working totally locally with no internet connection!

You can see their GitHub repo as well!